capablanca has been my primary file system for the better part of 7 years. I bought the parts in 2013 and it’s been serving files faithfully ever since. I had a bit of a scare with it when I almost lost its zpools, and back when it was using 2TB Western Digital Green drives there were two drive failures within the space of two weeks that would have destroyed my data if I didn’t rotate in new ones quickly, but other than that everything’s been smooth.

I’ve been hosting OwnCloud for family members on a VPS for a while, and a few years ago that instance ran out of disk space and I decided to migrate the set of containers to a physical machine at my house. I cobbled together a new Linux system (out of parts I had laying around) that I named lasker that used a mirrored set of 2TB disks I had that weren’t being used, and moved OwnCloud there. A little bit of ssh forwarding later and users wouldn’t notice a difference - the VPS now only ran nginx and took care of the public certificate renewals.

However now I was running two machines at my house where I could be running one. I couldn’t run the docker containers on my FreeBSD machine easily and didn’t want to take the deep dive into a different tech like bhyve or jails (although I did spent a few cycles on trying to get jails to work).

After 7 years, I decided it was time for a new file server. Migrating away from capablanca and lasker, my goals were:

Linux

capablanca runs ZFS because it is one of the best file systems out there, and an excellent choice for a file server. openzfs/zfs is the de-facto implementation of ZFS on Linux and FreeBSD, so there’s no clear reason to run FreeBSD for it anymore. I would also need docker to move services from lasker.

Lower power consumption than capablanca but a more powerful machine

Machines have evolved quite a bit hardware wise over the last 7 years, and I wanted to take advantage of that. Even though the new file server wouldn’t be doing much, a faster processor combined with faster RAM would be felt, and especially so because the plan was to host services there too.

So at the beginning of the year, before all the madness, I bought parts for a new server, appropriately named tempfile:

All together, about $1000 CAD.

It luckily all arrived in January, and I had it before shipping began to be an issue.

The first part of this plan was to burn in all the new hardware. For that, the standard set of memtest, mprime, and badblocks were used.

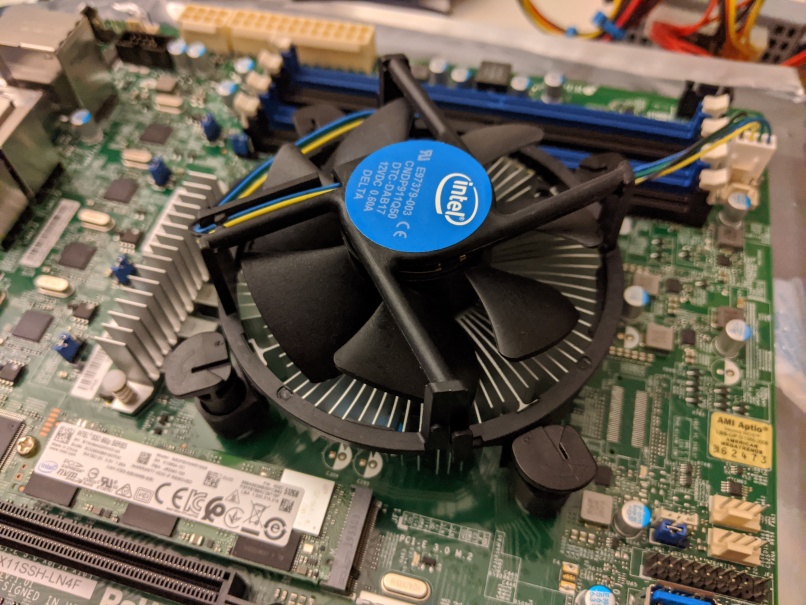

Unfortunately I don’t have many other assembly photos. Here’s what it looks like now:

It’s much easier to carry around, albeit a little cramped when installing everything.

After assembly, I had an empty tempfile and a full capablanca. The question was: how to migrate disks from capablanca to tempfile while preserving the data?

It’s important to note that the desired end state was going to to be two disk mirrors in tempfile, not three disk mirrors. Before any of this, I signed up for Backblaze B2 and backed up all important files there with restic. Until now I hadn’t set up any offsite backup - alekhine long ago lost the disk capacity to be a mirror of my primary file server. Two local copies plus a third offsite is safer than three local copies from a few scenarios, namely:

Before attempting any disk shuffling, backup!

For reference, capablanca’s zpool layout looked like this:

NAME STATE READ WRITE CKSUM

archive ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/R6G9PUEYp1.eli ONLINE 0 0 0

gpt/VJGLNBRXp1.eli ONLINE 0 0 0

gpt/VJGLHZAXp1.eli ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/ZCT0EM3Yp1.eli ONLINE 0 0 0

gpt/ZCT0E990p1.eli ONLINE 0 0 0

gpt/7SHXS3BWp1.eli ONLINE 0 0 0

I peeled off a disk from each mirror:

root@capablanca:/home/jwm # zpool detach archive gpt/R6G9PUEYp1.eli

root@capablanca:/home/jwm # zpool detach archive gpt/ZCT0EM3Yp1.eli

Afterwards, capablanca’s zpool layout looked like:

NAME STATE READ WRITE CKSUM

archive ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

gpt/VJGLNBRXp1.eli ONLINE 0 0 0

gpt/VJGLHZAXp1.eli ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

gpt/ZCT0E990p1.eli ONLINE 0 0 0

gpt/7SHXS3BWp1.eli ONLINE 0 0 0

Those two disks (R6G9PUEY and ZCT0EM3Y) went into tempfile, along with 2TB disks that I had laying around. In total, tempfile’s capacity started at 8+2+2 = 10TB:

NAME STATE READ WRITE CKSUM

pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ata-HGST_HDN728080ALE604_R6G9PUEY ONLINE 0 0 0

ata-ST8000DM004-2CX188_ZCT0EM3Y ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

ata-WDC_WD20EARS-00MVWB0_WD-WMAZA0686209 ONLINE 0 0 0

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300563174 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300578369 ONLINE 0 0 0

ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M2UHLZ54 ONLINE 0 0 0

(Note: ZCT0EM3Y is a Seagate ST8000DM004 (Barracuda Compute) drive, identified as an SMR drive and removed later. When the data was migrated to tempfile, capablanca performed the benchmarks that went into that post)

Note: instead of using LUKS encryption, I decided to use openzfs’s native encryption. Here are the commands that created the pool above:

root@tempfile:~# apt update

root@tempfile:~# apt install linux-headers-`uname -r`

root@tempfile:~# apt install -t buster-backports dkms spl-dkms

root@tempfile:~# apt install -t buster-backports zfs-dkms zfsutils-linux

root@tempfile:~# reboot

root@tempfile:~# zpool create -o ashift=12 -o autoexpand=on -m none \

pool \

mirror /dev/disk/by-id/ata-HGST_HDN728080ALE604_R6G9PUEY /dev/disk/by-id/ata-ST8000DM004-2CX188_ZCT0EM3Y \

mirror /dev/disk/by-id/ata-WDC_WD20EARS-00MVWB0_WD-WMAZA0686209 /dev/disk/by-id/ata-WDC_WD20EFRX-68AX9N0_WD-WMC300563174 \

mirror /dev/disk/by-id/ata-WDC_WD20EFRX-68AX9N0_WD-WMC300578369 /dev/disk/by-id/ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M2UHLZ54

root@tempfile:~# zpool set feature@encryption=enabled pool

root@tempfile:~# zfs create -o keyformat=passphrase -o encryption=aes-256-gcm -o mountpoint=/secret pool/secret

Enter passphrase:

Re-enter passphrase:

root@tempfile:~# sudo zpool import -l pool

10TB was enough to copy over files from capablanca, a process that took a little over a day for the initial 6TB rsync.

After performing a few more tests, I bought two new WD Elements 8TB external drives, shucked them, burned them in with badblocks (monitoring for reallocated sectors or other backblaze related signals), and added finally them to tempfile’s zpool:

NAME STATE READ WRITE CKSUM

pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ata-HGST_HDN728080ALE604_R6G9PUEY ONLINE 0 0 0

ata-ST8000DM004-2CX188_ZCT0EM3Y ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

ata-WDC_WD20EARS-00MVWB0_WD-WMAZA0686209 ONLINE 0 0 0

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300563174 ONLINE 0 0 0

mirror-2 ONLINE 0 0 0

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300578369 ONLINE 0 0 0

ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M2UHLZ54 ONLINE 0 0 0

mirror-3 ONLINE 0 0 0

ata-WDC_WD80EMAZ-00WJTA0_1EHWA06Z ONLINE 0 0 0

ata-WDC_WD80EMAZ-00WJTA0_2SGARPHJ ONLINE 0 0 0

tempfile’s capacity was now 20TB, and the u-nas case was full.

But! the zpool was unbalanced:

$ sudo zpool list -v

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

pool 18.2T 6.85T 11.3T - - 0% 37% 1.00x ONLINE -

mirror 7.27T 4.57T 2.69T - - 0% 62.9% - ONLINE

ata-HGST_HDN728080ALE604_R6G9PUEY - - - - - - - - ONLINE

ata-ST8000DM004-2CX188_ZCT0EM3Y - - - - - - - - ONLINE

mirror 1.81T 1.13T 702G - - 0% 62.2% - ONLINE

ata-WDC_WD20EARS-00MVWB0_WD-WMAZA0686209 - - - - - - - - ONLINE

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300563174 - - - - - - - - ONLINE

mirror 1.81T 1.15T 675G - - 0% 63.6% - ONLINE

ata-WDC_WD20EFRX-68AX9N0_WD-WMC300578369 - - - - - - - - ONLINE

ata-WDC_WD20EFRX-68EUZN0_WD-WCC4M2UHLZ54 - - - - - - - - ONLINE

mirror 7.27T 196K 7.27T - - 0% 0.00% - ONLINE

ata-WDC_WD80EMAZ-00WJTA0_1EHWA06Z - - - - - - - - ONLINE

ata-WDC_WD80EMAZ-00WJTA0_2SGARPHJ - - - - - - - - ONLINE

Notice the CAP column: the new 8TB mirror shows 0.00%. An unbalanced pool is not great - ZFS tries to keep CAP approximately the same when writing files, and I’m speculating that the last mirror will not be used at all until new files are written. More speculation: when new files are written they will all write to the new mirror until the CAP percentages balance out, and I’ll lose the advantage of files spread across disks.

I wrote a tool to balance the vdev capacity percentage, and called it zfs_rebalance.py. There’s more information on the README there.

Once balanced, I copied everything from capablanca and lasker over to tempfile. I did this multiple times to confirm that there were no problems, and began deleting the sources. capablanca served files over nfs and samba, and that was relatively painless to migrate: clients had to be pointed to tempfile but everything else worked. lasker had to have containers migrated too, but after that both machines were decommissioned.

One note: with the u-nas case being full, disk swapping with the trays couldn’t happen, I had to use external USB HDD adapter. There’s a procedure for this:

sudo zpool exportsudo rm /dev/disk/by-id/wwn-* ; sudo zpool import -d /dev/disk/by-id/ -l poolStep 6 is the important one - without it, ZFS will import the disk named usb- instead of ata-.

Another interesting note: After rsync, I wanted to compare to see that the copy worked:

jwm@lasker:~$ sudo du -hs /tank/

107G /tank/

jwm@tempfile /secret [] [] $ sudo du -hs /secret/lasker_tank/

54G /secret/lasker_tank/

My response: wat. There’s no way that rsync is to blame, but just in case I ran it a few more times. rsync showed no changes or additional files transferred.

I learned that the problem here is that disk usage is not the same as the number of bytes that files occupy. Printing out the bytes used shows:

jwm@lasker:~$ sudo find /tank/ -type f -print0 | du --files0-from=- -bsc | tail -n1

55477554970 total

jwm@tempfile /secret [] [] $ sudo find lasker_tank/ -type f -print0 | du --files0-from=- -bsc | tail -n1

55477554970 total